Biography

I am the founder of PlatformAI, dedicated to developing an AI agents platform that anticipates the advent of Artificial General Intelligence (AGI), superintelligence, and the singularity, an outlook I share with leading thinkers such as Ray Kurzweil, Elon Musk, Sam Altman, and Leopold Aschenbrenner. To ensure human safety amid rapidly advancing machine intelligence, we are creating the “Doctor of Truth,” a system designed to keep future AI agents aligned with human values. I earned my Ph.D. in June 2020 and subsequently served as a Research Fellow in Machine Learning Optics at Westlake University (October 2020–September 2021) before joining UCLA as a Postdoctoral Researcher in Machine Learning (October 2021–September 2023).

Twitter

integrityyang@gmail.com

LinkedIn

Google Scholar

With a decade of research experience across a wide range of fields—reinforcement learning, deep learning, unsupervised and self-supervised learning, large language models, AGI, diffusion models, deep unfolding, multimodal optimization, graph neural networks, meta-learning, and advanced imaging techniques—I have authored over 20 publications in top-tier venues such as ECCV, IJCV, Optica, Physical Review Letters, and Physical Review Applied.

At PlatformAI, we are now focused on building a comprehensive ecosystem of AI influencers and system agents. Our offerings include 24/7 AI-powered teachers, a suite of AI tools (e.g., video editors, TTS systems, and image generators), as well as specialized computational imaging solutions for medical and industrial applications. Guided by the core principle of “SpiritAI” (ensuring that agents remain safe, aligned, and subservient to human direction), we envision a future in which humanity transitions from hands-on labor to orchestrating intelligent agents. These personal assistants, copilots, and even company-scale system agents will work under our oversight, fundamentally reshaping the ways we learn, innovate, and thrive.

Work Experience

CEO and Cofounder (Full Time) – PlatformAI, Los Angeles Apr. 2024-Present

- Led the machine learning AI influencer project.

- Led the automatic computational imaging research.

- https://www.platformai.org/

CEO and Cofounder (Half Time) – PlatformAI, Los Angeles Jun. 2023-Mar. 2024

- Led the agent chatting app (SpiritAI).

- Led the AI tools hub project.

Machine Learning Engineer – Workmagic, San Francisco Oct. 2023-Mar. 2024

Led the virtual try-on project.

- Built a web UI for virtual try-on.

- Resolved collar and pants artifacts in try-on results by training Detectron2 to segment garments.

- Trained ControlNet to add detail to try-on images.

Led the diffusion-video project.

- Developed MagicAnimate, ensuring temporal consistency in animations.

- Explored DreamPose for fashion image-to-video synthesis using Stable Diffusion.

- Formulated an AI-driven video strategy for advertising applications.

Postdoctoral Scholar in machine learning – University of California, Los Angeles Oct. 2021-Sep. 2023

Led the Large Language Models (NLP) project.

- Built a DoctorBot based on the OpenAI API, LangChain, and Pinecone.

- Medical artificial general intelligence in radiology utilizing a multimodal GPT approach.

- Guided multi-modal chain-of-thought reasoning GPT.

- Conducted prompt engineering with ChatGPT (3.5 and 4), Bard, Bing, AutoGPT, Dolly, and Vicuna.

- Applied LLM methodology to light field tomography (LIFT).

- Authored a comprehensive series on Large Language Models (LLMs).

Led the machine learning project for light field tomography (LIFT).

- Achieved practical LIFT reconstruction by combining diffusion models and fine-tuning.

- Improved LIFT reconstruction accuracy with GANs in deep unfolding.

- Developed unsupervised learning and meta learning for LIFT.

Led the hyperspectral imaging deep learning project.

- Achieved practical hyperspectral image reconstruction by embedding a transformer and FastDVDnet into optimization-based diffusion models.

- Built a server platform by maintaining Linux systems and managing shared Linux accounts accessed through SSH and VS Code.

Skills: Cloud, PyTorch, TensorFlow, prompt engineering, fine-tuning, meta-learning, unsupervised learning, GAN, diffusion model, optimization, 3D reconstruction, Python, C/C++, Java, SQL.

Machine learning optics team leader – Westlake University Oct. 2020-Sep. 2021

- Established a machine learning optics team by recruiting PhD students and research assistants based on research background and interviews.

- Built a server platform by purchasing five servers with ten GPUs, collaborating with engineers, and maintaining Linux systems.

- Conducted reinforcement learning research.

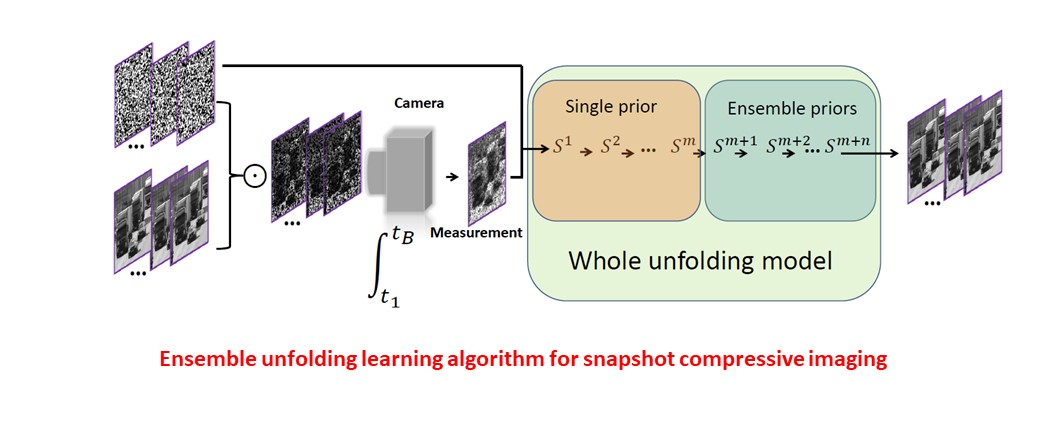

- Led the project on ensemble learning for snapshot compressive imaging.

- Achieved publication in ECCV 2022 by implementing scalable learning and high-speed strategies.

- Led the project on plug-and-play (PnP) demosaicing for snapshot compressive imaging.

- Pioneered an online training method to improve plug-and-play performance, published in the International Journal of Computer Vision (IJCV).

- Led the project on unsupervised learning for compressed ultrafast photography.

Skills: server, PyTorch, TensorFlow, GAN, reinforcement learning, diffusion model, optimization, deep unfolding, demosaicing, 3D reconstruction, unsupervised learning.

Computer Skills

- Operating system: Windows, Linux

- Programming languages: Python, C, C++, Java, JavaScript, MATLAB

- Deep learning frameworks and cloud platforms: PyTorch, TensorFlow, Caffe, AWS, Azure

- Others: Next.js, React, LaTeX, LangChain, Pinecone, Docker, SketchUp, Hexo, AT89C51 single-chip computer

Publications

Medical artificial general intelligence in radiology utilizing a multimodal GPT approach (In progress 2023)

Chengshuai Yang et al.

Guided multi-modal Chain of Thought reasoning GPT (In progress 2023)

Chengshuai Yang et al.

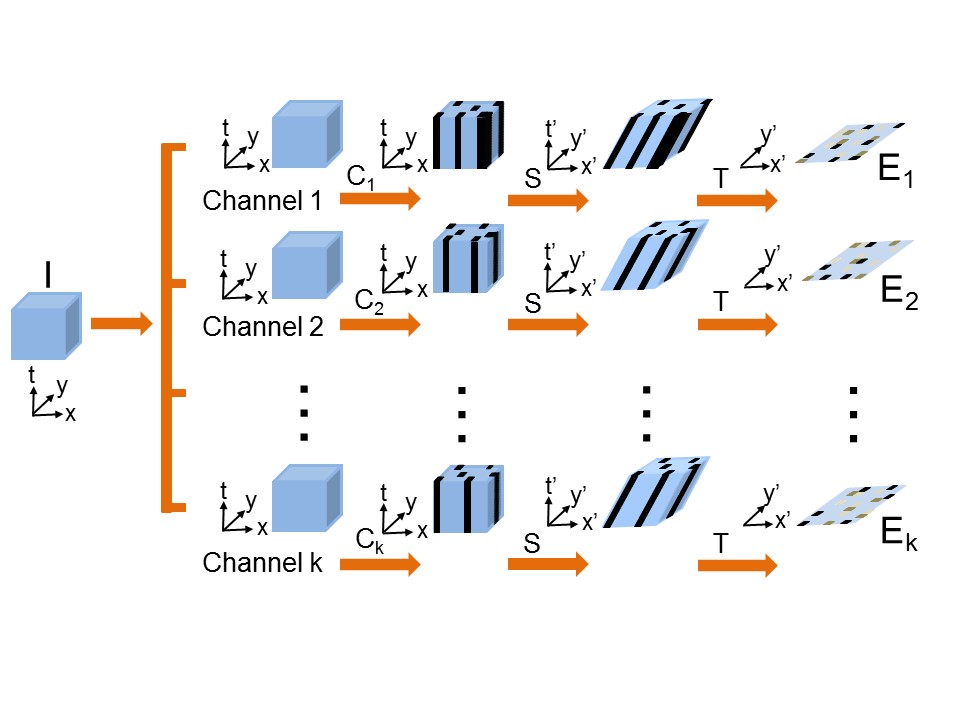

Ensemble learning priors unfolding for scalable Snapshot Compressive Sensing (In ECCV 2022) link github

Chengshuai Yang, Shiyu Zhang and Xin Yuan

Snapshot compressive imaging (SCI) records 3D information in a 2D measurement and then reconstructs the original 3D information from that measurement with a reconstruction algorithm. The reconstruction algorithm therefore plays a vital role in SCI. Recently, deep learning algorithms have shown outstanding ability, outperforming traditional algorithms. Improving the reconstruction accuracy of deep learning algorithms is therefore an important topic for SCI. In addition, deep learning algorithms are usually limited in scalability: a well-trained model generally cannot be applied to a new system without retraining. To address these problems, we develop ensemble learning priors to further improve reconstruction accuracy and propose scalable learning to give deep learning the same scalability as traditional algorithms. Our algorithm achieves state-of-the-art results, outperforming existing algorithms. Extensive results on both simulation and real datasets demonstrate the superiority of our proposed algorithm.

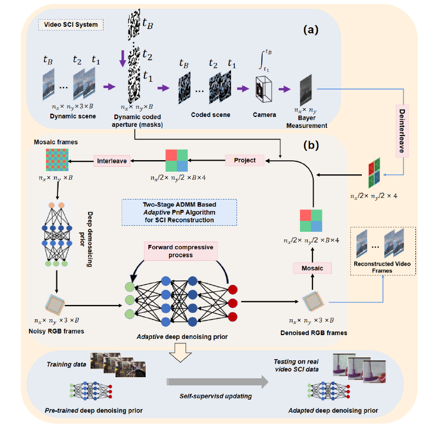

Adaptive Deep PnP Algorithm for Video Snapshot Compressive Imaging (International Journal of Computer Vision 2023:1-18) link github

Zongliang Wu#, Chengshuai Yang#, Xiongfei Su and Xin Yuan (#Co-first-author)

Video Snapshot compressive imaging (SCI) is a promising technique to capture high-speed videos, which transforms the imaging speed from the detector to mask modulating and only needs a single measurement to capture multiple frames. The algorithm to reconstruct high-speed frames from the measurement plays a vital role in SCI. In this paper, we consider the promising reconstruction algorithm framework, namely plug-and-play (PnP), which is flexible to the encoding process comparing with other deep learning networks. One drawback of existing PnP algorithms is that they use a pre-trained denoising network as a plugged prior while the training data of the network might be different from the task in real applications. Towards this end, in this work, we propose the online PnP algorithm which can adaptively update the network’s parameters within the PnP iteration; this makes the denoising network more applicable to the desired data in the SCI reconstruction. Furthermore, for color video imaging, RGB frames need to be recovered from Bayer pattern or named demosaicing in the camera pipeline. To address this challenge, we design a two-stage reconstruction framework to optimize these two coupled ill-posed problems and introduce a deep demosaicing prior specifically for video demosaicing which does not have much past works instead of using single image demosaicing networks. Extensive results on both simulation and real datasets verify the superiority of our adaptive deep PnP algorithm.

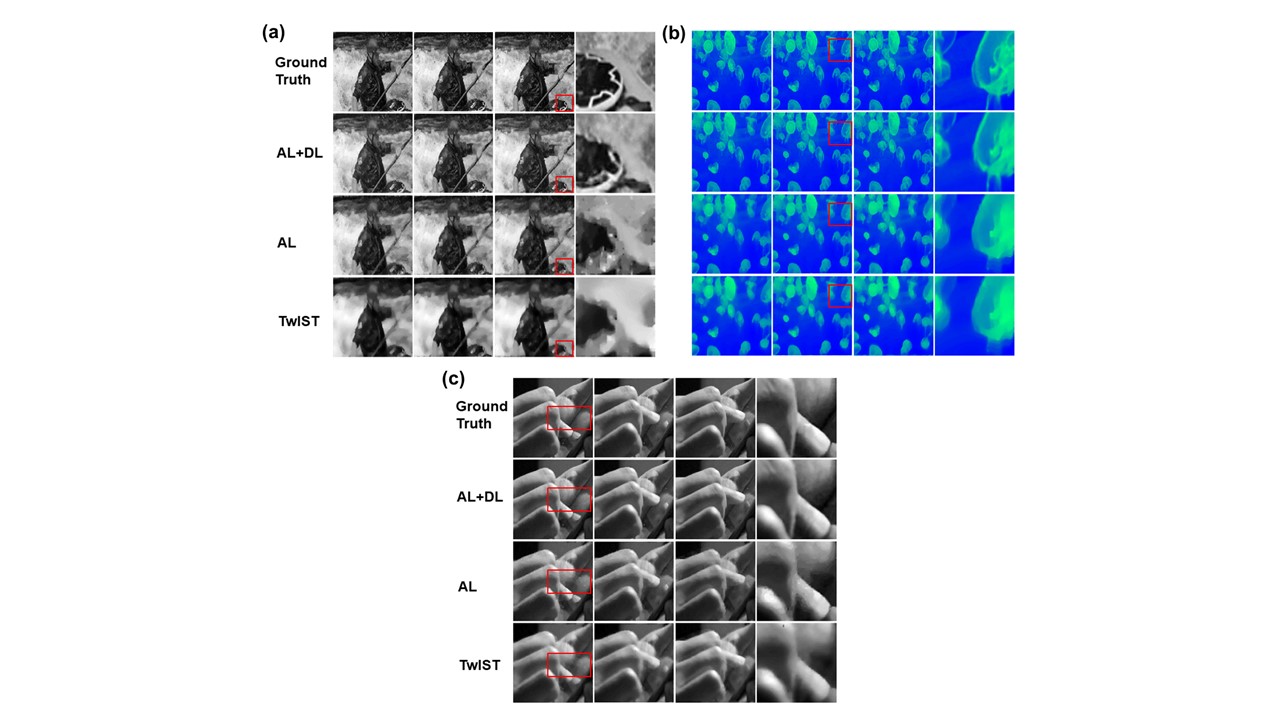

High-fidelity image reconstruction for compressed ultrafast photography via augmented-Lagrangian and deep-learning hybrid algorithm (Photonics Research 9(2) B30-B37 2021) link github

Chengshuai Yang, Yunhua Yao, Chengzhi Jin, Dalong Qi, Fengyan Cao, Yilin He, Jiali Yao, Pengpeng Ding, Liang Gao, Tianqing Jia, Jinyang Liang, Zhenrong Sun, and Shian Zhang

Compressed ultrafast photography (CUP), which is based on a three-dimensional (3D) image reconstruction method through the compressed sensing (CS) theory, has shown to be a powerful tool in recording self-luminous or non-repeatable ultrafast phenomena. However, the low image fidelity in the conventional augmented-Lagrangian (AL) and two-step iterative shrinkage/thresholding (TwIST) algorithms greatly limits the practical applications of CUP, especially for those ultrafast phenomena that need high spatial resolution. Here, we develop a novel AL and deep-learning (DL) hybrid (i.e., AL+DL) algorithm to realize high-fidelity image reconstruction for CUP. The AL+DL algorithm simplifies the mathematical architecture and optimizes the sparse domain and relevant iteration parameters via learning the dataset, and so it improves the image reconstruction accuracy. Our theoretical simulation and experimental results validate the superior performance of the AL+DL algorithm in the image fidelity over the conventional AL and TwIST algorithms, where peak signal to noise ratio (PSNR) and structural similarity index (SSIM) can be increased at least by 4 dB (or 9 dB) and 0.1 (or 0.05) for complex (or simple) dynamic scene, respectively. This study can promote the applications of CUP in related fields, and it will also enable a new strategy for recovering high-dimensional signals from low-dimensional detection. The codes of the AL+DL algorithm are available at https://github.com/integritynoble/ALDL-algorithm.

Label-free hyperspectral imaging and deep-learning prediction of retinal amyloid β-protein and phosphorylated tau (PNAS Nexus. 2022 Sep;1(4):pgac164) link

Xiaoxi Du, Yosef Koronyo, Nazanin Mirzaei, Chengshuai Yang, Dieu-Trang Fuchs, Keith L Black, Maya Koronyo-Hamaoui, Liang Gao

Alzheimer’s disease (AD) is a major risk for the aging population. The pathological hallmarks of AD-an abnormal deposition of amyloid β-protein (Aβ) and phosphorylated tau (pTau)-have been demonstrated in the retinas of AD patients, including in prodromal patients with mild cognitive impairment (MCI). Aβ pathology, especially the accumulation of the amyloidogenic 42-residue long alloform (Aβ42), is considered an early and specific sign of AD, and together with tauopathy, confirms AD diagnosis. To visualize retinal Aβ and pTau, state-of-the-art methods use fluorescence. However, administering contrast agents complicates the imaging procedure. To address this problem from fundamentals, ex-vivo studies were performed to develop a label-free hyperspectral imaging method to detect the spectral signatures of Aβ42 and pS396-Tau, and predicted their abundance in retinal cross-sections. For the first time, we reported the spectral signature of pTau and demonstrated an accurate prediction of Aβ and pTau distribution powered by deep learning. We expect our finding will lay the groundwork for label-free detection of AD.

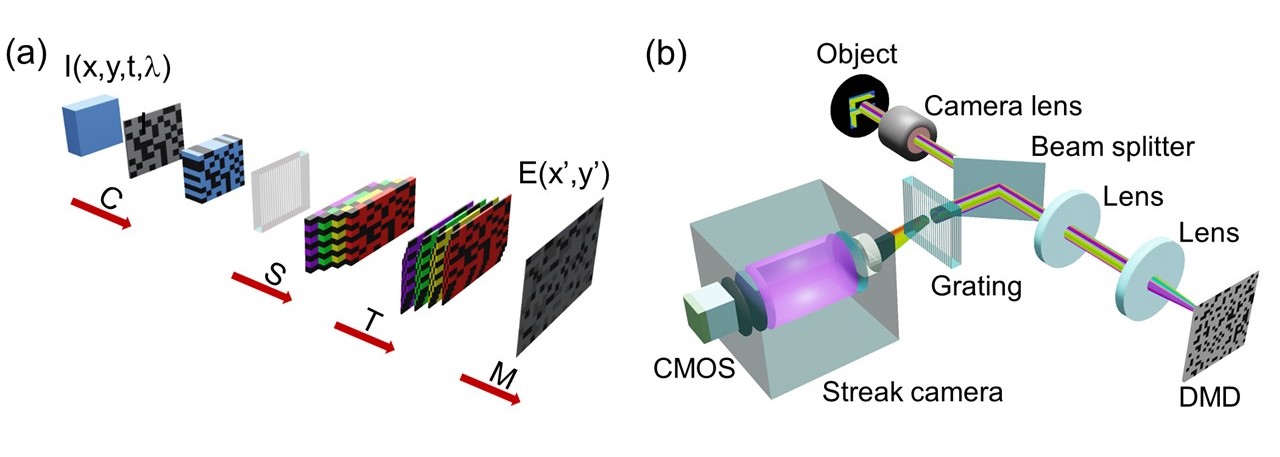

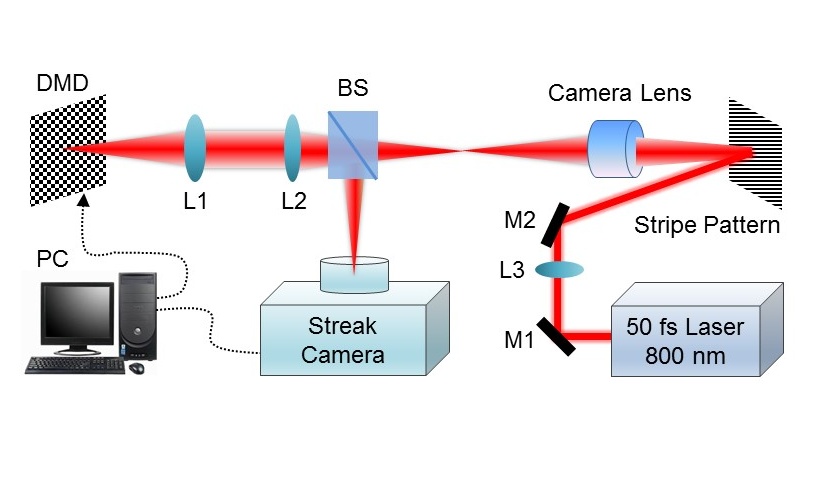

Hyperspectrally compressed ultrafast photography (Physical Review Letters, 124, 023902 (2020)) link

Chengshuai Yang, Fengyan Cao, Dalong Qi, Yilin He, Pengpeng Ding, Jiali Yao, Tianqing Jia, Zhenrong Sun and Shian Zhang

The spatial, temporal and spectral information in optical imaging play a crucial role in exploring unknown world and unencrypting natural mystery. However, the existing optical imaging techniques can only acquire the spatio-temporal or spatio-spectral information of the object with single shot. Here, we develop a hyperspectrally compressed ultrafast photography (HCUP) that can simultaneously record the spatial, temporal and spectral information of the object. In our HCUP, the spatial resolution is 1.26 lp/mm in horizontal direction and 1.41 lp/mm in vertical direction, the temporal frame interval is 2 ps and the spectral frame interval is 1.72 nm. Moreover, HCUP operates with receive-only and single-shot modes, and therefore it overcomes the technical limitation of active illumination and can measure the non-repetitive or irreversible transient events. Using our HCUP, we successfully measure the spatio-temporal-spectral intensity evolution of the chirped picosecond laser pulse and the photoluminescence dynamics. This study extends the optical imaging from three- to four-dimensional information, which has an important scientific significance in both fundamental research and applied science.

Optimizing codes for compressed ultrafast photography by the genetic algorithm (Optica 5(2), 147-151 (2018)) link

Chengshuai Yang, Dalong Qi, Xing Wang, Fengyan Cao, Yilin He, Wenlong Wen, Tianqing Jia, Jinshou Tian, Zhenrong Sun, Liang Gao, Shian Zhang, and Lihong V. Wang.

The compressed ultrafast photography (CUP) technique, providing the fastest receive-only camera so far, has shown to be a well-established tool to capture the ultrafast dynamical scene. This technique is based on random codes to encode and decode the ultrafast dynamical scene by a compressed sensing algorithm. The choice of random codes significantly affects the image reconstruction quality. Therefore, it is important to optimize the encoding codes. Here, we develop a new scheme to obtain the optimized codes by combining a genetic algorithm (GA) into the CUP technique. First, we measure the dynamical scene by the CUP system with random codes and obtain the dynamical scene image at each moment. Second, we use these reconstructed dynamical scene images as the optimization target and optimize the encoding codes based on the GA. Finally, we utilize the optimized codes to recapture the dynamical scene and improve the image reconstruction quality. We validate our optimization scheme by the numerical simulation of a moving double-semielliptical spot and the experimental demonstration of a time- and space-evolving pulsed laser spot.

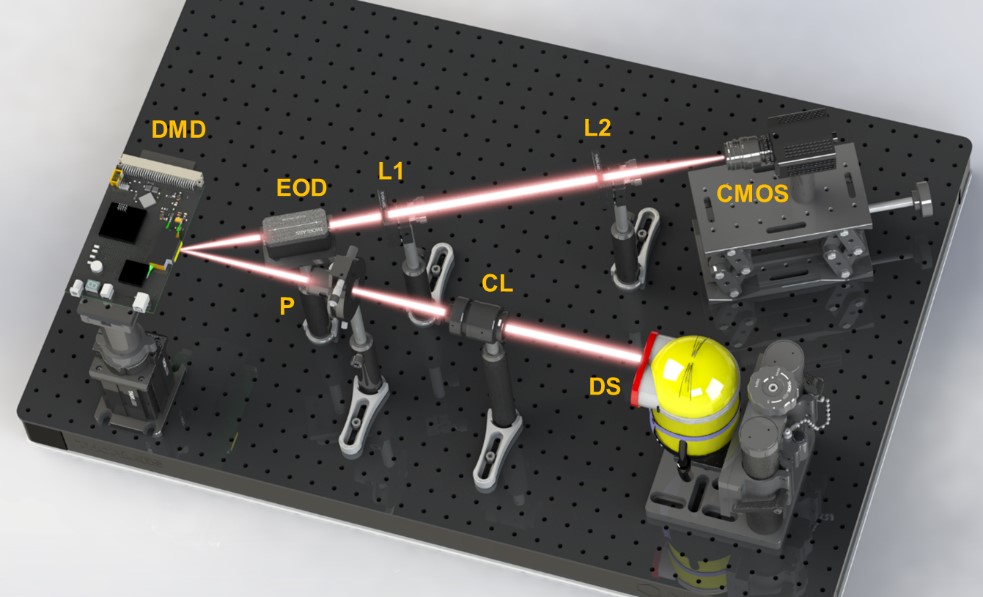

Single-shot and receive-only ultrafast electro-optical deflection imaging (Physical Review Applied, 13, 024001 (2020)) link

Chengshuai Yang, Dalong Qi, Fengyan Cao, Yilin He, Pengpeng Ding, Tianqing Jia, Shixiang Xu, Zhenrong Sun and Shian Zhang

Imaging ultrafast dynamic events has been a long-standing scientific goal. It is difficult for conventional electronic imaging sensors based on charge-coupled devices (CCD) or complementary metal-oxide semiconductors (CMOS) to capture dynamic processes occurring on the nanosecond or even shorter time scales due to the limitations of on-chip storage and electronic readout speed. Here, we developed a novel dynamical imaging technique with a very simple, compact configuration called ultrafast electro-optical deflection imaging (UEODI), which has a temporal resolution of up to 20 ps or an imaging speed of 5 × 10^10 frames per second (fps), and therefore it enables observing nanosecond and even picosecond dynamic events. UEODI operates in a single-shot, receive-only mode, and thus it is highly beneficial for imaging non-repetitive (or irreversible) dynamic events and a variety of luminescent objects. Moreover, when combined with the time-of-flight (TOF) method, UEODI can detect three-dimensional (3D) objects. Using UEODI, we visualized a molecular photoluminescent process and measured a 3D ladder structure. Considering the capabilities of UEODI, it will have very important application prospects in both basic research and applied science, including biomedical imaging.

Improving temporal and spatial resolutions of compressed ultrafast photography by using multi-encoding imaging (Laser Physics Letters 15(11), 116202 (2018)) link

Chengshuai Yang, Dalong Qi, Jinyang Liang, Xing Wang, Fengyan Cao, Yilin He, Xiaoping Ouyang, Baoqiang Zhu, Wenlong Wen, Tianqing Jia, Jinshou Tian, Liang Gao, Zhenrong Sun, Shian Zhang, and Lihong V. Wang

Imaging ultrafast dynamic scenes has been long pursued by scientists. As a two-dimensional dynamic imaging technique, compressed ultrafast photography (CUP) provides the fastest receive-only camera to capture transient events. This technique is based on three-dimensional image reconstruction by combining streak imaging with compressed sensing (CS). However, the image quality and the frame rate of CUP are limited by the CS-based image reconstruction algorithms and the inherent temporal and spatial resolutions of the streak camera. Here, we report a new method to improve the temporal and spatial resolutions of CUP. Our numerical simulation and experimental verification show that by using a multi-encoding imaging method, both the image quality and the frame rate of CUP can be significantly improved beyond the intrinsic technical parameters. Importantly, the temporal resolution by our scheme can break the limitation of the streak camera. Therefore, this new technology has potential benefits in many applications that require the ultrafast dynamic scene image with high temporal and spatial resolutions.

Improving the image reconstruction quality of compressed ultrafast photography via an augmented Lagrangian algorithm (Journal of Optics 035703,1-7 (2019)) link

Chengshuai Yang, Dalong Qi, Fengyan Cao, Yilin He, Xing Wang, Wenlong Wen, Jinshou Tian, Tianqing Jia, Zhenrong Sun and Shian Zhang

Compressed ultrafast photography (CUP) has been shown to be a powerful tool to measure ultrafast dynamic scenes. In previous studies, CUP used a two-step iterative shrinkage/thresholding (TwIST) algorithm to reconstruct three-dimensional image information. However, the image reconstruction quality greatly depended on the selection of the penalty parameter, which caused the reconstructed images to be unable to be correctly determined if the ultrafast dynamic scenes were unknown in advance. Here, we develop an augmented Lagrangian (AL) algorithm for the image reconstruction of CUP to overcome the limitation of the TwIST algorithm. Our numerical simulations and experimental results show that, compared to the TwIST algorithm, the AL algorithm is less dependent on the selection of the penalty parameter, and can obtain higher image reconstruction quality. This study solves the problem of the image reconstruction instability, which may further promote the practical applications of CUP.

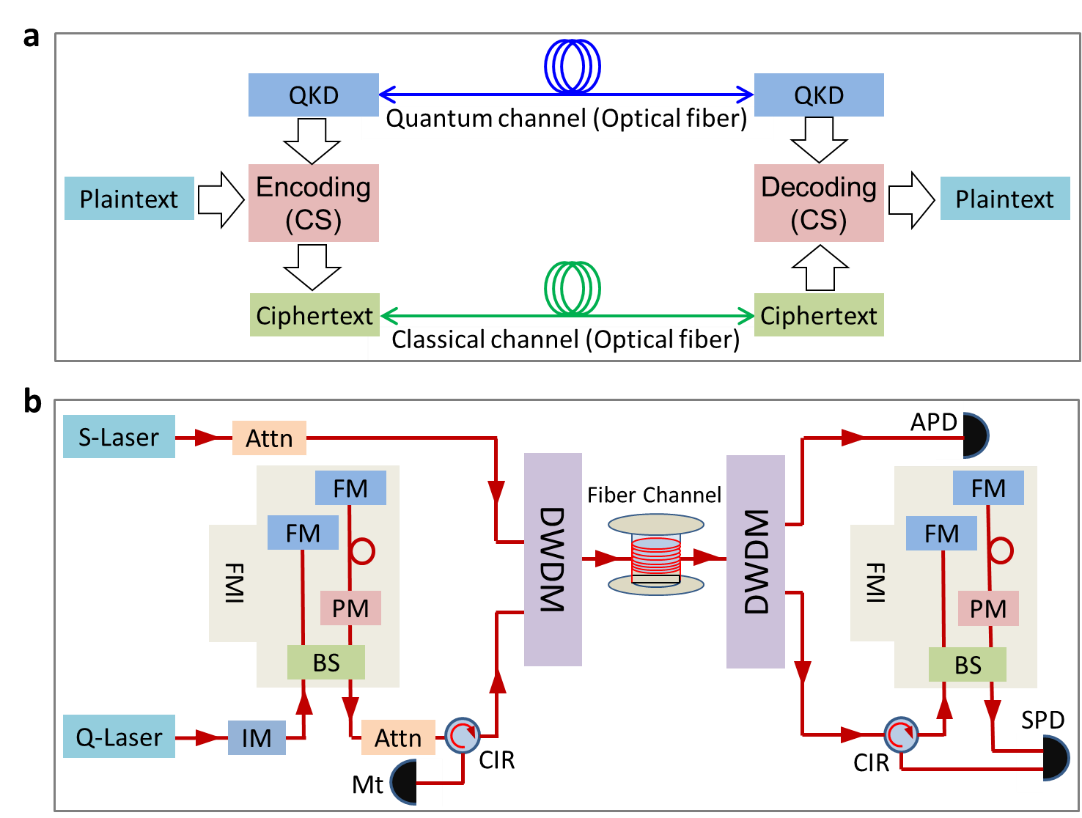

Compressed three-dimensional image information and communication security (Advanced Quantum Technologies 1800034, 1-8(2018)) link

Chengshuai Yang, Yuyang Ding, Jinyang Liang, Fengyan Cao, Dalong Qi, Tianqing Jia, Zhenrong Sun, Shian Zhang, Wei Chen, Zhenqiang Yin, Shuang Wang, Zhengfu Han, Guangcan Guo, and Lihong V. Wang

Ensuring information and communication security in military messages, government instructions, scientific experiments, as well as in personal data processing, is critical. In this study, a new hybrid classical–quantum cryptographic scheme to protect image information and communication security is developed by combining a quantum key distribution (QKD) and compressed sensing (CS). This method employs a QKD system to generate true random codes among the remote legitimate users and utilizes these random codes to encrypt and decrypt compressed 3D image information based on the CS algorithm. Therefore, this new technique can provide computational security in the image information transmission process by the encryption and decryption of CS algorithm, and the information and communication security can be evaluated in real time by monitoring the QKD system. Furthermore, this technique can directly transmit and reconstruct the compressed 3D image information based on the modified TwIST algorithm, and thus fewer random codes are required in QKD system, which can improve the information transmission bandwidth. Consequently, this technique not only provides a new application of a QKD system but also extends the CS-based image reconstruction from 2D to 3D. This study may open a new opportunity in the field of information and security communication.

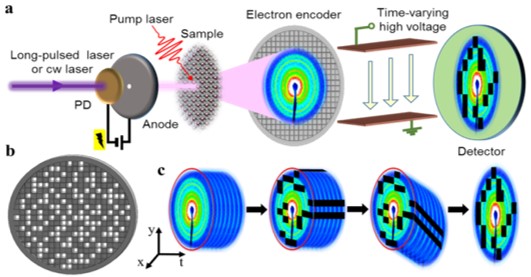

Compressed ultrafast electron diffraction imaging through electronic encoding (Physical Review Applied 054061(10),1-9 (2018)) link

Dalong Qi, Chengshuai Yang, Fengyan Cao, Jinyang Liang, Yilin He, Yan Yang, Tianqing Jia, Zhenrong Sun, and Shian Zhang

Ultrafast electron diffraction (UED) with high temporal and spatial resolutions is a powerful tool to observe transient structural changes in materials on an atomic scale. This technique is based on a pump-probe method using ultrashort laser and electron pulses. Therefore, UED requires that the measured transients be highly repeatable. Moreover, the relative time jitter between laser and electron pulses significantly affects the UED temporal resolution. To overcome the UED technical limitations, we propose a technique called compressed ultrafast electron diffraction imaging (CUEDI). In this technique, we encode time-evolving electron diffraction patterns with random codes on an electron encoder. Then, the encoded electron diffraction pattern is measured by a detector after a temporal shearing operation. Finally, the evolution process of the electron diffraction pattern is reconstructed using a compressed sensing algorithm. We confirm the feasibility of our proposed scheme by numerically simulating the polycrystalline gold melting process based on the experimental data measured with the pump-probe method. Because CUEDI employs a continuous or long electron pulse, the relative time jitter between laser and electron pulses can be eliminated. Additionally, CUEDI measures transients with a single shot, which allows irreversible processes to be directly observed.

Single-shot spatiotemporal intensity measurement of picosecond laser pulses with compressed ultrafast photography (Optics and Lasers in Engineering 116,89-93 (2019)) link

Fengyan Cao, Chengshuai Yang, Dalong Qi, Jiali Yao, Yilin He, Xing Wang, Wenlong Wen, Jinshou Tian, Tianqing Jia, Zhenrong Sun, and Shian Zhang

The spatiotemporal measurement of the ultrashort laser pulses is of great significance in the diagnosis of the instrument performance and the exploration of the laser and matter interaction. In this work we report an advanced compressed ultrafast photography (CUP) technique to measure the spatiotemporal intensity distribution of the picosecond laser pulses with a single shot. This CUP technique is based on a three-dimensional image reconstruction strategy by employing the random codes to encode the space-time-evolving laser pulse and decode it based on a compressed sensing (CS) algorithm. In our CUP system, the measurable laser wavelength depends on the spectral response of the streak camera, which can cover a wide range from ultraviolet (200 nm) to near infrared (850 nm). Based on the CUP system we develop, we successfully measure the spatiotemporal intensity evolutions of some typical laser pulses, such as the 800 nm picosecond laser pulse, the 800 and 400 nm two-color picosecond laser pulses and the supercontinuum picosecond laser pulse. These experimental results show that the CUP technique can well characterize the spatiotemporal intensity information of the picosecond laser pulses. Moreover, this technique has the remarkable advantages with the single shot measurement and without the reference laser pulse.

Single-shot compressed ultrafast photography: a review (Advanced Photonics, 2(1), 014003(2020)) link

Dalong Qi, Shian Zhang, Chengshuai Yang, Yilin He, Fengyan Cao, Jiali Yao, Pengpeng Ding, Liang Gao, Tianqing Jia, Zhenrong Sun, Jinyang Liang, and Lihong V. Wang

Compressed ultrafast photography (CUP) is a burgeoning single-shot computational imaging technique that provides an imaging speed as high as 10 trillion frames per second and a sequence depth of up to a few hundred frames. This technique synergizes compressed sensing and the streak camera technique to capture non-repeatable ultrafast transient events with a single shot. With recent unprecedented technical developments and extensions of this methodology, it has been widely used in ultrafast optical imaging and metrology, ultrafast electron diffraction and microscopy, and information security protection. Here, we review the basic principles of CUP, its recent advances in image acquisition and reconstruction, its fusions with other modalities, and its unique applications in multiple research fields.

Journal Reviewer

I am a reviewer for Pattern Recognition, ICCV, CVPR, and Photonics Research.

Programming Experience

- Guided multi-modal Chain of Thought reasoning GPT, since Mar. 2023

- Developed and wrote the supervised diffusion model for hyperspectral imaging, Dec. 2022-Mar. 2023

- Developed and wrote a diffusion model for light field photography, Aug. 2022-Nov. 2022

- Developed and wrote the Generative Adversarial Network (GAN) for light field photography, May 2022-Jul. 2022

- Developed and wrote a meta-learning algorithm for light field photography, May 2022-Jul. 2022

- Developed and wrote the plug-and-play algorithm for compressed ultrafast photography (CUP), Jan. 2022-May 2022

- Developed and wrote the deep unfolding algorithm for CUP FLIM, Oct. 2021-Jan. 2022

- Wrote the deep unfolding algorithm code for video snapshot compressive imaging in Python with PyTorch, Apr. 2021-Jul. 2021

- Wrote the deep reinforcement learning code for patch selection in Python with PyTorch, Dec. 2020-Mar. 2021

- Wrote unsupervised learning code for CUP, Jun. 2020-Dec. 2020

- Wrote the augmented Lagrangian deep learning algorithm code, Sep. 2019-Jan. 2020

- Developed and wrote code for hyperspectral CUP, Sep. 2019-Jan. 2020

- Built a GUI for CUP and wrote augmented Lagrangian algorithm code for CUP, Dec. 2018-Jan. 2019

- Wrote Python scripts that control the mouse and keyboard to reduce manual workload, Mar. 2018-Apr. 2018

- Developed and wrote genetic algorithm code for optimizing CUP, Mar. 2017-Jan. 2018

- Developed and wrote algorithm code for compressed ultrafast electron diffraction imaging through electronic encoding, Jan. 2017-Oct. 2017

- Developed and wrote genetic algorithm code for compressed three-dimensional image information and communication security, Oct. 2016-Apr. 2017

- Developed and wrote two-color terahertz code, Oct. 2015-Mar. 2016

- Wrote the program for a wireless meter reading system on a single-chip computer in C and Visual Basic; this was my undergraduate thesis and won the first prize in the Innovation Competition in Jiangsu Province, Mar. 2013-Mar. 2015

- Wrote the program for a smart home system on a single-chip computer in C, which won the third prize in the Innovation Competition in Jiangsu Province, Mar. 2013-Apr. 2014

Awards and Honors

- Outstanding Ph.D. in East China Normal University (ECNU), Jun. 2020

- Future Scientist Development Program in ECNU, Mar. 2019

- Academic Innovation Promotion Program for Excellent Doctoral Students in ECNU, Jul. 2018

- Outstanding undergraduate in Nantong University, Jun. 2015

- Excellent Undergraduate Thesis in Nantong University, Jun. 2015

- The First Class Scholarship for Undergraduate in Nantong University, Oct. 2014

- Pacemaker to Merit Student in Nantong University, Oct. 2014

- The First Prize of Undergraduate Students’ Physics and Experimental Technology Works Innovation Competition in Jiangsu Province, Nov. 2013

- Computer software level 3 in Jiangsu Province, Dec. 2013

- Level 2 Visual Basic of Computer science in Jiangsu Provincial Colleges and Universities, Dec. 2012

- Level 2 C programming language of Computer science in National Colleges and Universities, Sep. 2013

- Level 3 hardware of Computer science in Jiangsu Provincial Colleges and Universities, May 2013

- Level 3 Software of Computer science in Jiangsu Provincial Colleges and Universities, Dec. 2013

- The First Prize of Advanced Mathematics Contest in Jiangsu Province, Jul. 2012

Activities

- Bible study in the University Cooperative Housing Association, Los Angeles, Jun. 2022

- Future Scientist Development Program in ECNU, Mar. 2019-Jan. 2021

- Academic Innovation Promotion Program for Excellent Doctoral Students in ECNU, Jul. 2018-Jun. 2020

- College student training program based on single-chip computer and C programming language in Nantong University, Mar. 2013-Jun. 2014